Autonomous vehicle companies use simulators to train their self-driving systems and teach them how to react to “agents” — things like pedestrians, cyclists, traffic signals and other cars. To have a truly advanced AV system, those agents need to behave and react realistically to the AV and to each other.

Creating and training intelligent agents is one of the problems Waymo is trying to solve, and it’s a common challenge in the world of AV research. To that end, Waymo on Thursday launched a new simulator for the AV research community that provides an environment in which to train intelligent agents, complete with prebuilt sim agents and troves of Waymo perception data.

“Traditional simulators have predefined agents often, so someone wrote the script on how the agent is supposed to behave, but that’s not necessarily how they behave,” Drago Anguelov, head of research at Waymo, told TechCrunch during a video interview.

“In our case, what this simulator is paired with is a large dataset of our vehicles observing how everyone in environments behave. By observing how everyone behaves, how much can we learn about how we should behave? We call this a stronger imitative component, and it’s the key to developing robust, scalable AV systems.”

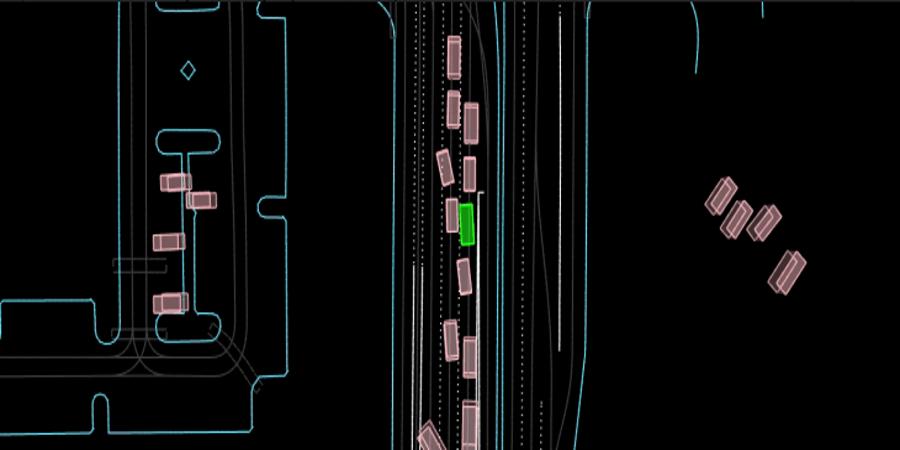

Waymo says the simulator, dubbed Waymax, is “lightweight,” to allow researchers to iterate quickly. By lightweight, Waymo means that the simulation isn’t fully fleshed out with realistic-looking agents and roads. Rather, it shows a rough representation of a road graph, and the agents are portrayed as bounding boxes with certain attributes built in. It’s basically a cleaned-up environment that allows researchers to focus on complex behaviors among multiple road users rather than on how agents and the environment look, says Anguelov.
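To make the "bounding boxes with certain attributes" idea concrete, here is a minimal Python sketch. It is not Waymax's actual data model: the `BoxAgent` class, its fields, and the constant-velocity `step` method are all hypothetical names chosen for illustration, and the overlap check ignores heading for simplicity.

```python
from dataclasses import dataclass


@dataclass
class BoxAgent:
    """A hypothetical road user reduced to an axis-aligned bounding box."""
    kind: str        # e.g. "pedestrian", "cyclist", "vehicle"
    x: float         # center position in meters
    y: float
    length: float    # extent along x in meters
    width: float     # extent along y in meters
    vx: float = 0.0  # velocity in meters per second
    vy: float = 0.0

    def step(self, dt: float) -> "BoxAgent":
        """Return the agent advanced by dt seconds at constant velocity."""
        return BoxAgent(self.kind, self.x + self.vx * dt, self.y + self.vy * dt,
                        self.length, self.width, self.vx, self.vy)

    def overlaps(self, other: "BoxAgent") -> bool:
        """True if the two boxes intersect (heading is ignored here)."""
        return (abs(self.x - other.x) < (self.length + other.length) / 2
                and abs(self.y - other.y) < (self.width + other.width) / 2)


# Illustrative values only: a car approaching a stationary pedestrian.
car = BoxAgent("vehicle", x=0.0, y=0.0, length=4.5, width=2.0, vx=10.0)
walker = BoxAgent("pedestrian", x=20.0, y=0.5, length=0.6, width=0.6)
```

With no rendering involved, questions such as "do these two road users collide after two seconds?" reduce to a call like `car.step(2.0).overlaps(walker)`, which is the kind of behavioral question a lightweight simulator lets researchers focus on.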

The simulator is now available on GitHub but cannot be used for commercial purposes. Rather, it’s part of Waymo’s larger initiative to give researchers access to tools — like its Waymo Open Dataset — that can help accelerate autonomous vehicle development.

Waymo says it can’t view the work that researchers create using Waymax, but that doesn’t mean the Alphabet-owned AV company doesn’t stand to gain from sharing its tools and data.

Waymo regularly hosts challenges for researchers to help solve problems relevant to AVs. In 2022, the company organized one such challenge called “Simulated Agents.” Waymo populated a simulator with agents and tasked researchers with training them to behave realistically in relation to its test vehicle. While the challenge was underway, Waymo realized it didn’t have a robust enough environment set up in which to train the agents. So Waymo worked with Google Research to develop a more suitable environment that can run in a closed-loop fashion, meaning one in which the behavior of the system is continually monitored and tweaked to create meaningful outcomes.

Which is how Waymo got to Waymax.
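The closed-loop idea can be illustrated with a small Python sketch. It assumes the common reading of the term, in which agents observe the simulated AV at every step and adjust, as opposed to replaying a recording regardless of what the AV does. The function names, positions, and gap threshold below are invented for illustration and are not taken from Waymax.

```python
def replay_follower(logged_positions, av_positions):
    """Open loop: the follower repeats its recorded positions and ignores the AV."""
    return list(logged_positions)


def reactive_follower(start, speed, av_positions, min_gap=5.0):
    """Closed loop: at each step the follower observes the AV and adjusts."""
    positions = [start]
    for av_x in av_positions[1:]:
        proposed = positions[-1] + speed
        # Never advance closer than min_gap behind the AV's current position.
        positions.append(min(proposed, av_x - min_gap))
    return positions


# The simulated AV brakes to a stop at x = 30, unlike in the recorded drive.
av = [20.0, 30.0, 30.0, 30.0, 30.0]
logged = [0.0, 10.0, 20.0, 30.0, 40.0]  # the follower's recorded path

open_loop = replay_follower(logged, av)
closed_loop = reactive_follower(start=0.0, speed=10.0, av_positions=av)
```

In this toy run the replayed follower drives straight through the stopped AV, while the reactive follower stops five meters behind it. That difference is why scripted or replayed agents become unrealistic once the AV deviates from the recording.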

Anguelov says Waymo will likely rerun that challenge next year using the new simulator. These types of challenges allow the company to see how advanced the AV industry is on certain problems — like multi-agent environments — and see how Waymo’s tech compares.

“The Waymo Open Dataset and these simulators are our way to steer the academic or research discussion towards directions we think are promising, and then we will look forward to seeing what people will develop,” said Anguelov, noting that these challenges also help to attract attention, and therefore talent, to the field of AV and robotics research.

The researcher also said the Waymax simulator could help unlock improvements in reinforcement learning, which can lead to AV systems displaying emergent behavior. Reinforcement learning is a machine learning technique in which an agent learns to make decisions by interacting with an environment and receiving feedback in the form of rewards or penalties for each action it takes, similar to how humans move through the world. In the case of agents, a simulated pedestrian might receive a reward for not walking into another pedestrian, for example.

Anguelov says this can lead to emergent behavior, or behavior that a human wouldn’t necessarily display, such as different types of lane changes or even many vehicles agreeing to drive consistently if they recognize each other as AVs. The result could be safer autonomous driving.
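The reward-and-penalty loop described above can be sketched with tabular Q-learning, one standard reinforcement learning algorithm. The article does not say which methods Waymo or Waymax users employ, so this is only a toy illustration: the two-lane sidewalk, the reward values, and every name in the code are assumptions made for the example.

```python
import random

LENGTH = 5       # sidewalk cells along the walking direction
OTHER = (2, 0)   # a stationary pedestrian at cell 2 in lane 0
ACTIONS = ("forward", "switch_lane")


def step(state, action):
    """Return (next_state, reward, done) for one move."""
    x, lane = state
    if action == "forward":
        x += 1
    else:
        lane = 1 - lane
    if (x, lane) == OTHER:
        return (x, lane), -10.0, True  # penalty: walked into the other pedestrian
    if x == LENGTH - 1:
        return (x, lane), 10.0, True   # reward: reached the end of the sidewalk
    return (x, lane), -1.0, False      # small cost per move to discourage dawdling


def train(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values by trial and error with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {((x, lane), a): 0.0
         for x in range(LENGTH) for lane in (0, 1) for a in ACTIONS}
    for _ in range(episodes):
        state, done = (0, 0), False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit
            nxt, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            # Q-learning update: move the estimate toward reward + discounted future value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = nxt
    return q


def greedy_path(q, max_steps=20):
    """Follow the highest-valued action from the start cell."""
    state, path = (0, 0), [(0, 0)]
    for _ in range(max_steps):
        action = max(ACTIONS, key=lambda a: q[(state, a)])
        state, _, done = step(state, action)
        path.append(state)
        if done:
            break
    return path


q = train()
path = greedy_path(q)
```

Nobody scripts the sidestep here. The simulated pedestrian starts in the same lane as the other walker and learns to change lanes only because collisions are penalized and reaching the end is rewarded.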

Source @TechCrunch