Hiya, folks! Welcome to our weekly AI newsletter.

Just a few days ago, OpenAI revealed its new AI model, o1. The model takes time to "think" before answering questions, breaking problems down into steps and checking its own answers. It isn't perfect, but it excels in certain domains, such as physics and math.

This has implications for AI regulation. California's proposed AI bill, SB 1047, would impose safety requirements on AI models that cost more than $100 million to develop or that exceed a set threshold of computing power. But o1 shows that scaling up training compute isn't the only way to improve a model's performance.

According to Nvidia research manager Jim Fan, future AI systems may rely on small, easier-to-train "reasoning cores" rather than ever-larger models. Recent studies have shown that small models, given more time to think, can outperform much larger ones.

Sara Hooker, head of AI startup Cohere's research lab, believes policymakers should reconsider their approach to AI regulation. In her view, tying regulatory measures to compute is short-sighted because it ignores everything that can be done at inference time, that is, when a model is actually running.

Top news:

* OpenAI's o1 model shows strong performance in physics and math despite not having more parameters than previous models.

* Intel is partnering with AWS to develop an AI chip using Intel’s 18A chip fabrication process.

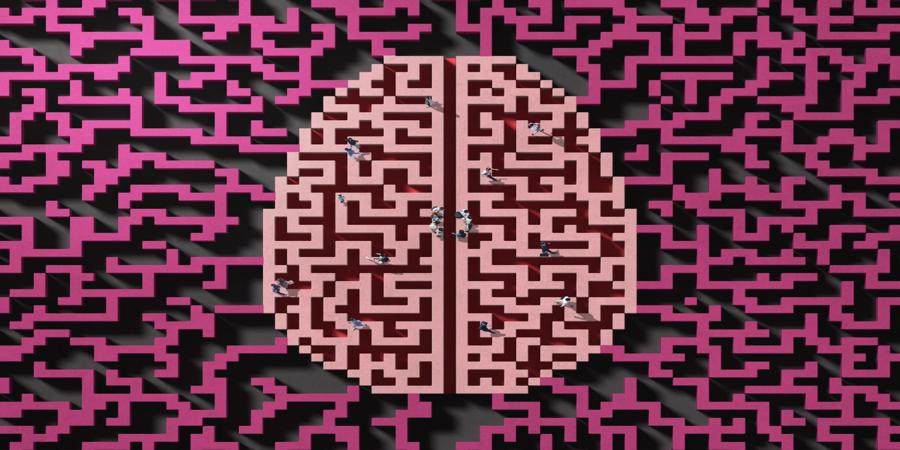

* Microsoft is releasing a new AI benchmark called Eureka, which tests a model’s visual-spatial navigation skills.

* California has passed two laws restricting the use of AI digital replicas of performers.

Recent experiments suggest that AI can help reduce belief in conspiracy theories. In one study, a chatbot gently offered counterevidence to people who believed in conspiracy theories, reducing their belief by 20%, an effect that persisted two months later.